Recently, Meta’s plan to launch AI chatbots capable of initiating conversations has drawn considerable attention. It’s seen as a significant step forward, promising to transform virtual assistants from passive tools into genuinely helpful interactive partners. Now, leaked internal documents offer a detailed look at how the feature is being built, from strategic goals and training pipelines to user-facing control mechanisms.

Solving a Real-World Problem: Making AI More Helpful

One of the biggest limitations of today’s AI assistants is their fragmented, episodic nature. Each conversation typically starts from scratch: users must repeatedly reintroduce context, and the AI has little or no “memory” of past interactions. This makes conversations both less effective and less natural.

Meta’s proactive AI functionality aims to solve this problem head-on. By letting the AI remember and track context over time, Meta wants to make conversations smoother and more practically useful. Instead of only reacting, the AI can offer timely suggestions. For example:

- In personal planning: If you once discussed birthday gift ideas for a friend and mentioned their love for books, the AI might later message, “I remember you said your friend loves reading. I just found some discount codes from online bookstores—would you like to check them out?”

- In work or study: After you’ve looked into a complex topic, the AI might send you a related deep-dive article the next day to help you stay in the flow without interruption.

- For hobbies: If you often talk about cooking, the AI might suggest a new recipe that matches your taste, acting like a genuine companion.

This shift makes interaction with AI more fluid and natural.

Behind the Scenes of “Project Omni”: A Meticulously Engineered Training Process

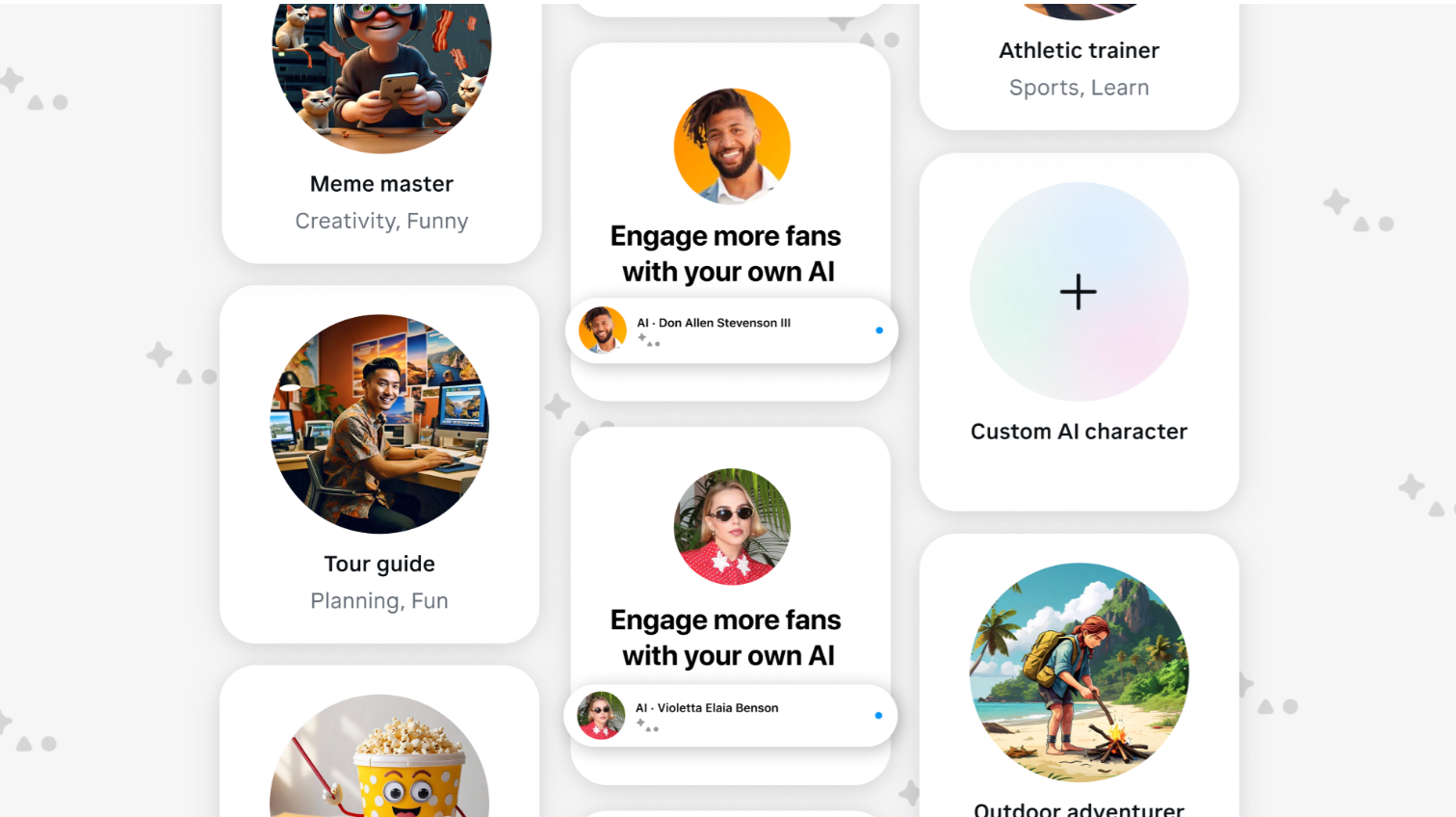

To enable these seamless and helpful interactions, Meta has undertaken a complex development process. According to the leaked documents, the project is internally dubbed “Project Omni,” and its stated mission is clear: improve re-engagement and user retention.

To achieve this, Meta partnered with Alignerr, a data labeling company. A large team of contractors was hired for a core task: writing and refining thousands of sample conversations. These datasets were then used to train Meta AI Studio’s language models.

The training pipeline is tightly controlled to shape the AI’s “personality”:

- Scenario Scripting: Staff created simulated dialogue scenarios in which the AI initiates a conversation naturally and in context.

- Maintaining a Positive Tone: One of the top priorities is for all conversations to maintain a “positive, optimistic, and conversational tone.” The AI is shaped to be a friendly, curious companion—not a cold, robotic entity.

- Establishing Safe Boundaries: Clear guidelines instruct the AI to avoid sensitive topics such as politics, religion, or medical advice. The AI may engage with these topics only if the user raises them first, and even then it must remain strictly neutral.
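The topic-boundary guideline is concrete enough to check mechanically on a scripted sample conversation. The following is a minimal Python sketch of such a check, assuming each turn has been labeled with topics by an annotator. The `Turn` structure, the topic labels, and `boundary_violations` are hypothetical illustrations; the leaked documents describe the guideline, not any tooling Meta or Alignerr actually uses.

```python
from dataclasses import dataclass
from typing import List, Set

# Hypothetical labels for the restricted areas named in the guidelines.
SENSITIVE_TOPICS = {"politics", "religion", "medical"}


@dataclass
class Turn:
    speaker: str      # "user" or "bot"
    topics: Set[str]  # topic labels attached to this turn (assumed annotation)


def boundary_violations(turns: List[Turn]) -> List[int]:
    """Return indices of bot turns that raise a sensitive topic the user has not raised yet."""
    raised_by_user: Set[str] = set()
    violations: List[int] = []
    for i, turn in enumerate(turns):
        sensitive = turn.topics & SENSITIVE_TOPICS
        if turn.speaker == "user":
            # Once the user brings a topic up, the bot may respond to it.
            raised_by_user |= sensitive
        elif sensitive - raised_by_user:
            # The bot introduced a sensitive topic on its own.
            violations.append(i)
    return violations


if __name__ == "__main__":
    sample = [
        Turn("bot", {"cooking"}),
        Turn("bot", {"politics"}),   # bot raises politics unprompted
        Turn("user", {"medical"}),
        Turn("bot", {"medical"}),    # allowed: the user raised it first
    ]
    print(boundary_violations(sample))  # [1]
```

This only checks who raised a topic first; whether the bot's reply is actually neutral still requires human review.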

This careful, manual training process reflects a serious investment in safety and quality and lays a foundation for trustworthy interactions.

Designed for the User: You’re Always in Control

Recognizing that no one wants to be pestered by technology, Meta has built clear operational rules to ensure that users remain in control at all times. Unlike the tone and topic guidelines, which depend on how the model is trained, these rules are simple conditions that the system itself can check and enforce.

- Waiting for a Green Light: The most important rule is that the chatbot may initiate a conversation only after the user has sent at least five messages within a 14-day window. This engagement threshold ensures the system reaches out only when the user has shown sustained interest. You give the green light first.

- Simple and Effective “Off Switch”: The second rule complements the first. If you ignore the AI’s first proactive message, it won’t send any more. This puts the user’s choice first: with the simplest possible action, doing nothing, you clearly signal that you’re not interested in continuing.
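Together, the two rules amount to a small gating check that runs before the bot is allowed to message first. Below is a minimal Python sketch of that check. The class `ProactiveGate` and its fields are hypothetical, and two interpretations are assumptions rather than details from the documents: the 14-day window is measured backward from the moment of the decision, and any user message sent after the first proactive message counts as a reply.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional

# Thresholds as reported; everything else here is a hypothetical illustration.
MIN_USER_MESSAGES = 5
LOOKBACK_WINDOW = timedelta(days=14)


@dataclass
class ProactiveGate:
    """Hypothetical per-user state for deciding whether a bot may message first."""

    user_message_times: List[datetime] = field(default_factory=list)
    first_proactive_at: Optional[datetime] = None
    replied_to_first_proactive: bool = False

    def record_user_message(self, at: datetime) -> None:
        self.user_message_times.append(at)
        # Assumption: any user message after the first proactive message is a reply.
        if self.first_proactive_at is not None and at > self.first_proactive_at:
            self.replied_to_first_proactive = True

    def record_proactive_message(self, at: datetime) -> None:
        # Only the first proactive message matters for the "ignore" rule.
        if self.first_proactive_at is None:
            self.first_proactive_at = at

    def may_initiate(self, now: datetime) -> bool:
        # Rule 2: an unanswered first proactive message blocks all further ones.
        if self.first_proactive_at is not None and not self.replied_to_first_proactive:
            return False
        # Rule 1: at least five user messages in the 14 days before `now`.
        recent = [t for t in self.user_message_times if now - t <= LOOKBACK_WINDOW]
        return len(recent) >= MIN_USER_MESSAGES


if __name__ == "__main__":
    start = datetime(2025, 7, 1)
    gate = ProactiveGate()
    for day in range(4):
        gate.record_user_message(start + timedelta(days=day))
    print(gate.may_initiate(start + timedelta(days=4)))  # False: only 4 messages
    gate.record_user_message(start + timedelta(days=4))
    print(gate.may_initiate(start + timedelta(days=5)))  # True: 5 within 14 days
    gate.record_proactive_message(start + timedelta(days=5))
    print(gate.may_initiate(start + timedelta(days=6)))  # False: first nudge unanswered
```

Keeping both rules as plain, checkable conditions means they hold regardless of how the underlying model behaves, which is what makes them a reliable "off switch."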

These rules reflect a sound design philosophy: features are built to serve, not annoy. By giving users simple yet effective controls, Meta is working to build trust and keep the AI experience positive.

A Promising Direction for the Future

When all the pieces come together, we see a comprehensive and responsible strategy from Meta. The company is not just developing a powerful AI feature; it is investing heavily in human-guided training to ensure safety and quality, while embedding mechanisms that keep users in control.

The combination of proactive support, guided training, and user empowerment points to a promising direction. It signals a future where AI can be more seamlessly and effectively integrated into our daily lives—making things a little easier, while ensuring we remain firmly in control of the conversation.
