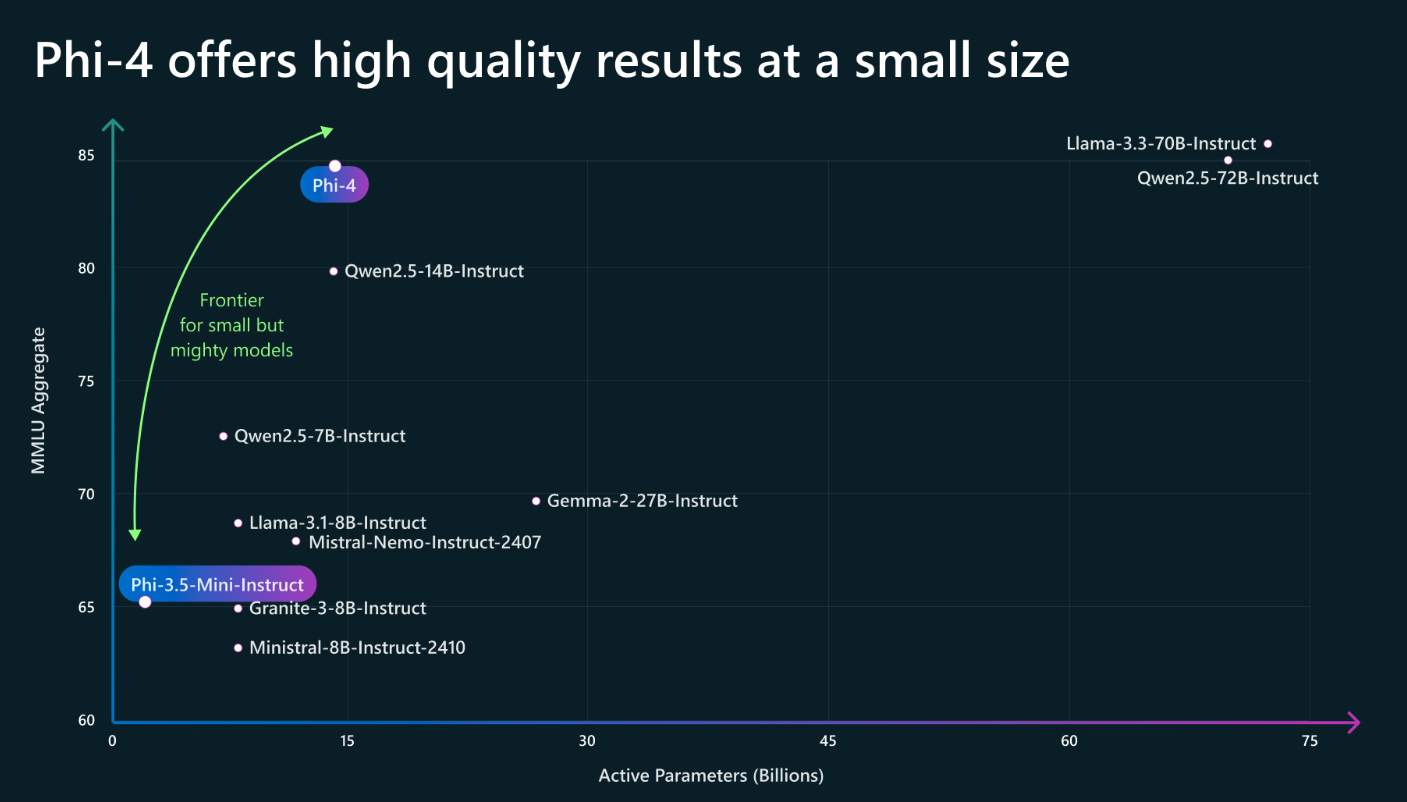

Microsoft has launched Phi-4, its latest Small Language Model (SLM), designed to excel in complex reasoning and language tasks while maintaining impressive efficiency. Building on the success of previous Phi models, Phi-4 aims to push the boundaries of what’s possible with smaller, more accessible AI.

Unlike Large Language Models (LLMs), which are often computationally intensive, SLMs like Phi-4 are designed to be faster, more cost-effective, and easier to deploy across platforms, including resource-constrained devices. Phi-4’s training emphasizes high-quality data, which lets it perform strongly despite its smaller size.

This focus on quality over quantity is evident in Phi-4’s ability to tackle challenging tasks such as code generation, math, and logical reasoning with accuracy that rivals much larger models. Diverse training data and careful alignment make this possible. As a result, AI becomes viable for use cases where larger models are impractical.

Phi-4 also stands out for its ability to learn from a smaller number of examples. This efficiency is key for real-world applications where gathering huge datasets is challenging. It shows that powerful AI does not always require massive scale, which leads to more accessible and sustainable solutions.

Phi-4 marks a significant step in developing efficient and capable AI solutions. Its ability to perform complex tasks while maintaining a smaller footprint positions it as a key technology for a wide range of AI applications, from chatbots and intelligent assistants to embedded systems and edge devices. Microsoft’s focus on innovation in efficient AI suggests a promising future for accessible and powerful AI technologies.

You can try Phi-4 at https://ai.azure.com/explore/models?selectedCollection=phi
